Chase Goddard

PhD student at Princeton University

I’m Chase Goddard, a PhD student in the physics department at Princeton University advised by David Schwab and Bill Bialek. My research has focused on generalization and algorithmic capabilities of modern machine learning methods, both in and out of distribution. I am particularly interested in what ingredients (e.g. pretraining data, model architecture, optimization strategy) are necessary for these capabilities to emerge. I’ve also taught several courses at the graduate and undergraduate level.

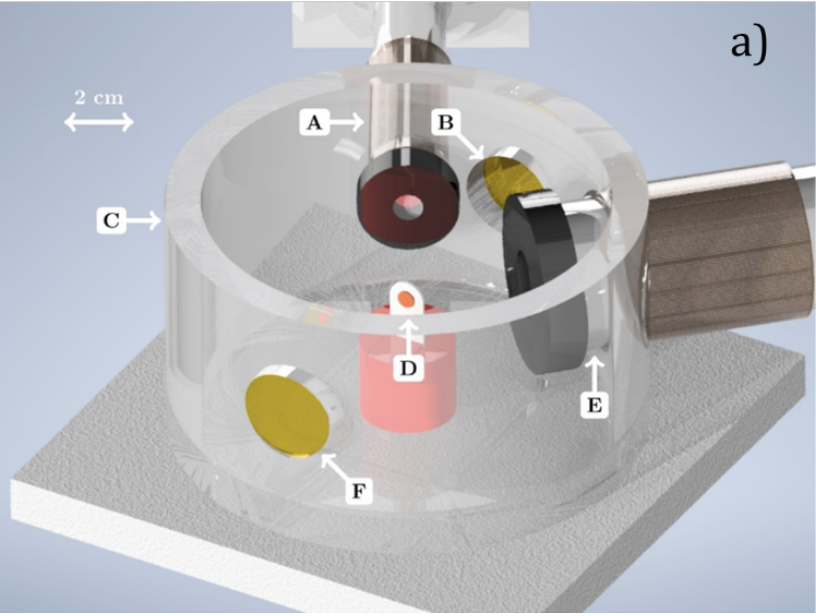

Before Princeton, I majored in physics and computer science at Cornell University, where I worked with Carl Franck on X-ray spectroscopy and with Julia Thom-Levy on the Compact Muon Solenoid (CMS) experiment at the Large Hadron Collider. I also held an internship at Boston Consulting Group’s Henderson Institute, where I worked on a data analysis project that contributed to the Fortune Future 50 ranking.

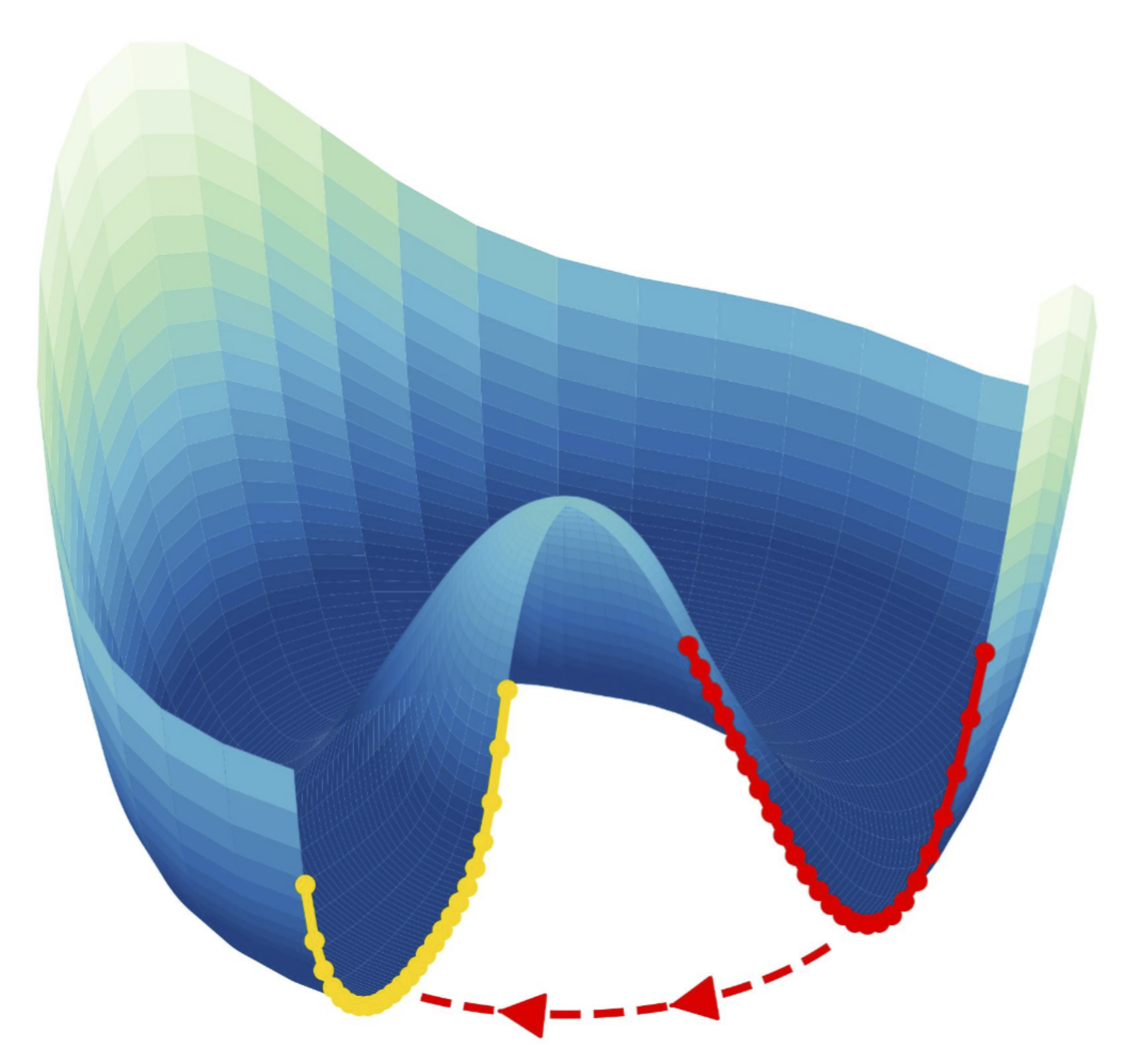

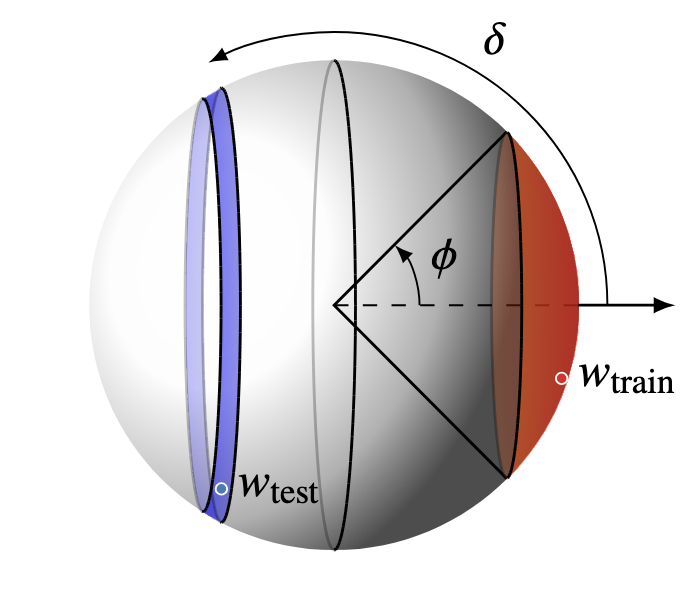

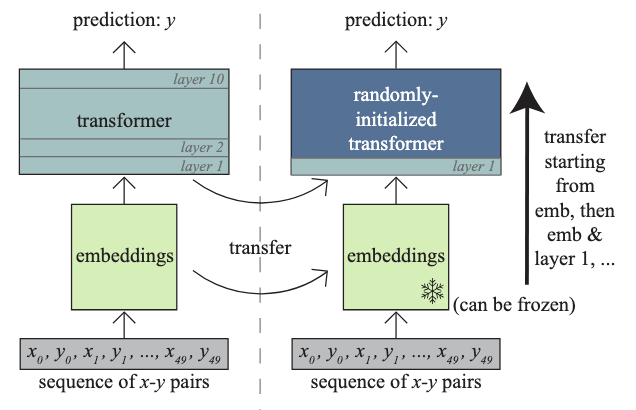

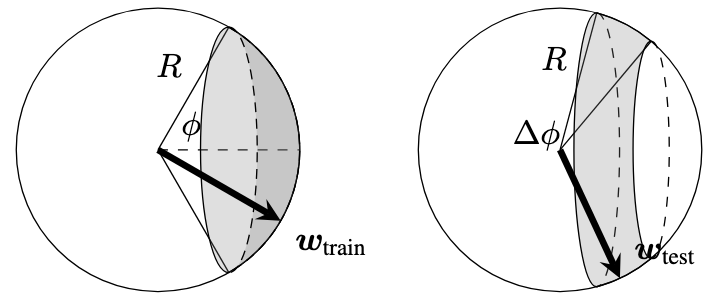

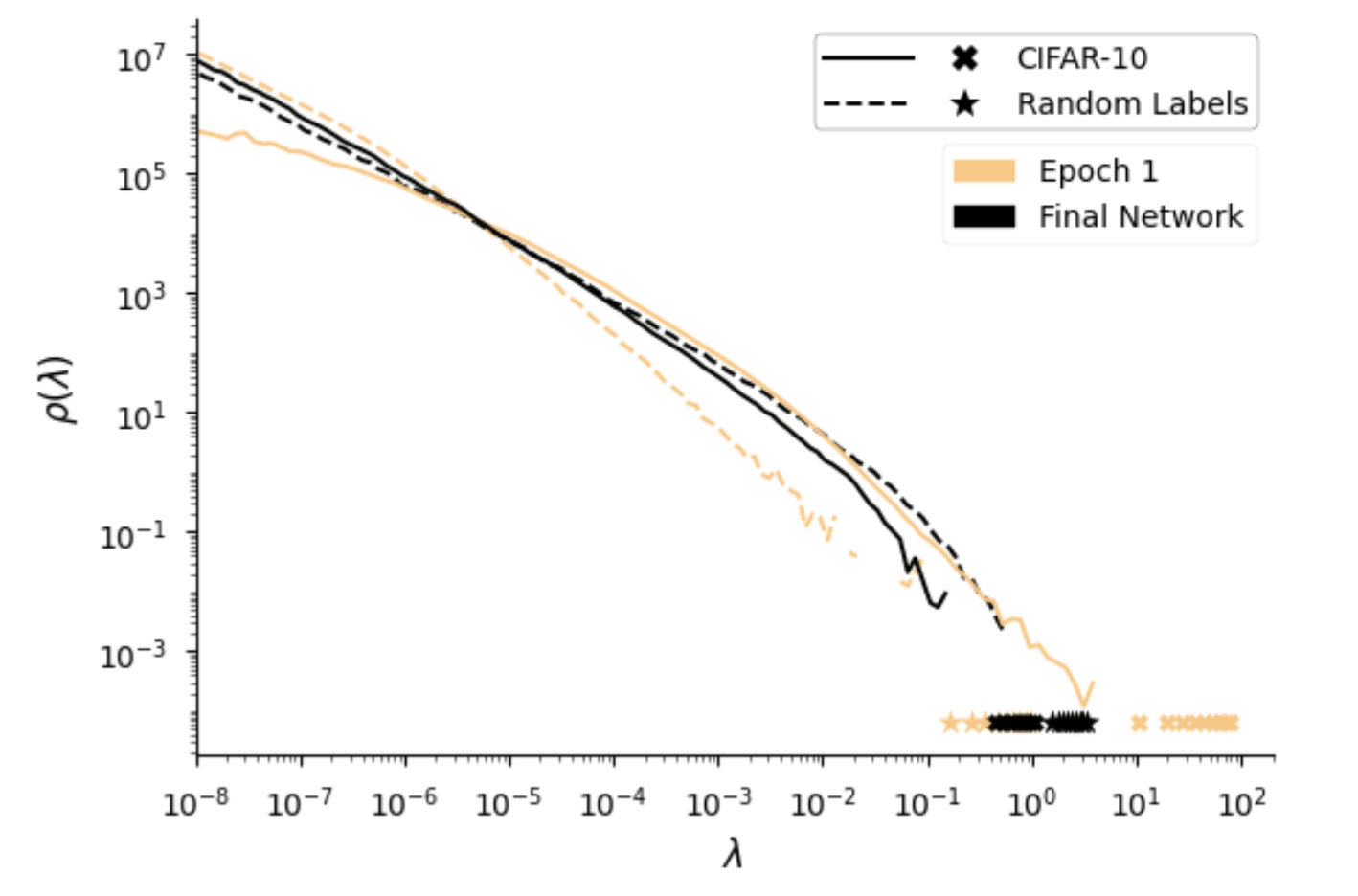

I am currently working on characterizing and improving reasoning in LLMs via reinforcement learning; understanding generalization and mode connectivity by investigating global geometric properties of the loss landscape of overparameterized models; and extending our analysis of out-of-distribution generalization in transformers to richer domains. Stay tuned!

For an up-to-date list of my work, see my Google Scholar profile or the list below.

Publications

2026

2025

- Preprint

Optimization and variability can coexist. Under review, PNAS, May 2025. *Authors in alphabetical order.